Auth0 Machine to Machine token caching

Overview

Auth0's Machine-to-Machine (M2M) applications are commonly used for integrations between external vendors, apps, or utilities and Auth0 itself, such as the Progressive Profiling used in the Signup Process or the Terraform Auth0 Provider.

We've done a spike that works nicely with external vendors, which can be reviewed here.

These M2M applications use JWTs: a client exchanges its client credentials (the client_credentials grant) for a JWT, then presents that token to request access to resources.
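As a rough illustration of that exchange, the sketch below builds (but does not send) the token request a vendor would make. The domain, client ID, secret, and audience are placeholder values, not ones from our tenant.

```python
import json
import urllib.request


def build_token_request(domain, client_id, client_secret, audience):
    """Build the client_credentials exchange request without sending it."""
    payload = {
        "grant_type": "client_credentials",
        "client_id": client_id,
        "client_secret": client_secret,
        "audience": audience,
    }
    return urllib.request.Request(
        url=f"https://{domain}/oauth/token",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Placeholder values, for illustration only.
req = build_token_request(
    "example.eu.auth0.com",
    "my-client-id",
    "my-client-secret",
    "https://api.example.com",
)

# Sending it would look like this (left commented so the sketch runs offline):
# with urllib.request.urlopen(req) as resp:
#     access_token = json.load(resp)["access_token"]
```

Each successful call of this kind counts against the token quota discussed next.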

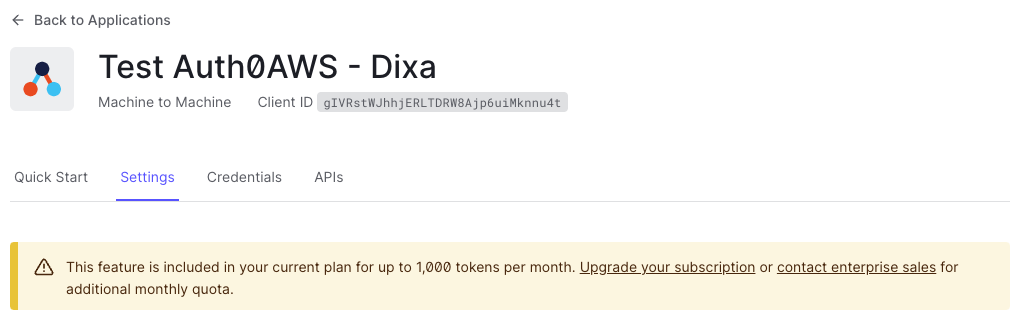

Known issues: Token limited quota

Auth0 has a limited quota on the number of JWT tokens that can be generated; the free tier allows up to 1k a month, and that can be upgraded as required.

There's a downside to Auth0's implementation, though: issued tokens are not cached or reused. Every token request sent by a client generates a new JWT, regardless of the expiration time configured in Auth0's Application settings.

This is a problem for external vendors like the ones covered in the spike mentioned at the beginning of this document, because we can't guarantee that a vendor's client-side implementation will reuse each token until it expires.
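For reference, a well-behaved client would keep its token until shortly before expiry. The following is a minimal sketch of that behaviour, not any vendor's actual code; `CachedToken`, `fetch_token`, and the 30-second safety margin are all hypothetical names and values chosen for illustration.

```python
import time


class CachedToken:
    """Reuse a token until shortly before it expires (illustrative sketch)."""

    def __init__(self, fetch_token, clock=time.monotonic, safety_margin=30):
        # fetch_token is a hypothetical callable returning
        # (access_token, expires_in_seconds).
        self._fetch_token = fetch_token
        self._clock = clock
        self._margin = safety_margin
        self._token = None
        self._expires_at = 0.0

    def get(self):
        now = self._clock()
        if self._token is None or now >= self._expires_at:
            token, expires_in = self._fetch_token()
            self._token = token
            # Refresh slightly early so a token never expires mid-request.
            self._expires_at = now + expires_in - self._margin
        return self._token


# Demo with a fake fetcher and a fake clock, so no network call is made.
calls = []


def fake_fetch():
    calls.append(1)
    return f"token-{len(calls)}", 3600


fake_now = [0.0]
cache = CachedToken(fake_fetch, clock=lambda: fake_now[0])

first = cache.get()    # fetches a new token
second = cache.get()   # reuses the cached token
fake_now[0] = 3600.0   # jump past the expiry
third = cache.get()    # fetches again
```

A client that skips this step issues one quota-consuming request per call instead of one per expiry window.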

Not only that, the token quota is a shared pool that affects all clients, since there is no per-application rate limit. In other words, a single vendor with an inefficient client-side implementation can block all the others.

In the worst-case scenario, vendors will consume our Auth0 token quota and we can easily run out of tokens for M2M applications.

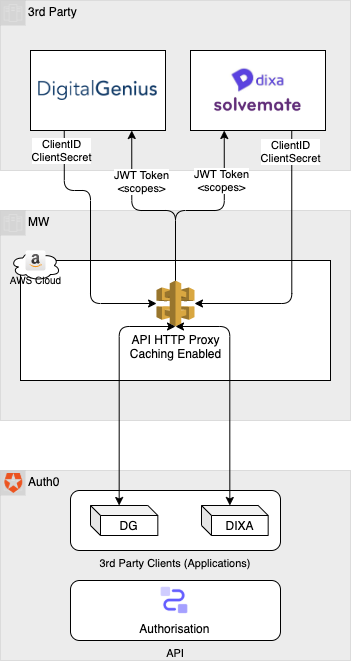

Spike: Use of AWS API Gateway as a caching mechanism

Rather than implementing a solution that maps tokens and clients from Auth0 into a Redis-like store (by using Auth0's client credentials customisation, see also the following webhook), a simpler solution could use API Gateway's caching features. This can help mitigate consumption of the token quota, but it would only work well with a very small number of vendors or a moderate request rate (vendors not pulling data very frequently).

In this case, we could use API Gateway as an HTTP proxy between vendors and Auth0, with caching enabled and the request body used as the cache key.
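The idea can be modelled with a toy in-memory proxy. This is only a sketch of the concept, not how API Gateway is implemented internally; `BodyKeyedCache`, `forward`, and the sample bodies are hypothetical.

```python
import hashlib


class BodyKeyedCache:
    """Toy model of a caching proxy that keys entries on the request body."""

    def __init__(self, forward, ttl_seconds, clock):
        self._forward = forward  # hypothetical callable that reaches Auth0
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries = {}       # body hash -> (expires_at, response)

    def handle(self, body):
        key = hashlib.sha256(body).hexdigest()
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and now < entry[0]:
            return entry[1]      # cache hit: Auth0 is not contacted
        response = self._forward(body)
        self._entries[key] = (now + self._ttl, response)
        return response


# Demo: count how many requests would actually reach Auth0.
upstream_calls = []


def fake_auth0(body):
    upstream_calls.append(body)
    return f"jwt-{len(upstream_calls)}"


now = [0]
proxy = BodyKeyedCache(fake_auth0, ttl_seconds=3600, clock=lambda: now[0])

vendor_a = b'{"client_id": "vendor-a", "grant_type": "client_credentials"}'
vendor_b = b'{"client_id": "vendor-b", "grant_type": "client_credentials"}'

a1 = proxy.handle(vendor_a)  # miss, forwarded
a2 = proxy.handle(vendor_a)  # hit, same token returned
b1 = proxy.handle(vendor_b)  # different body, separate entry
```

Because each vendor sends its own credentials in the body, each vendor gets its own cache entry, and repeated identical requests within the TTL return the same token.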

Caching details

- A standard capacity of 0.5 GB seems to be more than enough to hold Auth0's responses (about 1.9 KB each).

- If the number of vendors increases notably, AWS uses an LRU eviction strategy, which fits the purpose of this spike well. A bad client implementation will benefit from this feature.

- API Gateway allows a maximum TTL of 3600 s. If we consider only 2 vendors using this integration, that would consume up to 48 tokens a day (24 per vendor), or roughly 1,440 tokens in a 30-day month. Auth0's free-tier M2M token quota is up to 1,000; our current enterprise tenant subscription gives us up to 5,000.

- The TTL is not renewed when a key is requested, so cache hits and cache misses don't affect how long a key persists in the stage cache. (The TTL dictates the invalidation of a key, unless there's a cache flush or a client key invalidation.)
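The quota estimate above can be reproduced with a few lines of arithmetic. This is a worst-case sketch that assumes every vendor requests tokens continuously, so each one causes exactly one cache miss per TTL window, and that a month has 30 days; the function names are illustrative.

```python
import math

SECONDS_PER_DAY = 24 * 60 * 60


def tokens_per_month(vendors, ttl_seconds=3600, days=30):
    """Worst case: one new token per vendor per TTL window."""
    per_vendor_per_day = math.ceil(SECONDS_PER_DAY / ttl_seconds)
    return vendors * per_vendor_per_day * days


def max_vendors(quota, ttl_seconds=3600, days=30):
    """Largest number of vendors that stays within a monthly quota."""
    return quota // tokens_per_month(1, ttl_seconds, days)


two_vendors = tokens_per_month(2)    # 2 * 24 * 30 = 1440
free_tier_limit = max_vendors(1000)  # 1000 // 720 = 1
enterprise_limit = max_vendors(5000) # 5000 // 720 = 6
```

Under these assumptions two vendors already exceed the free-tier quota, and the enterprise quota covers about six always-active vendors at the maximum TTL.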

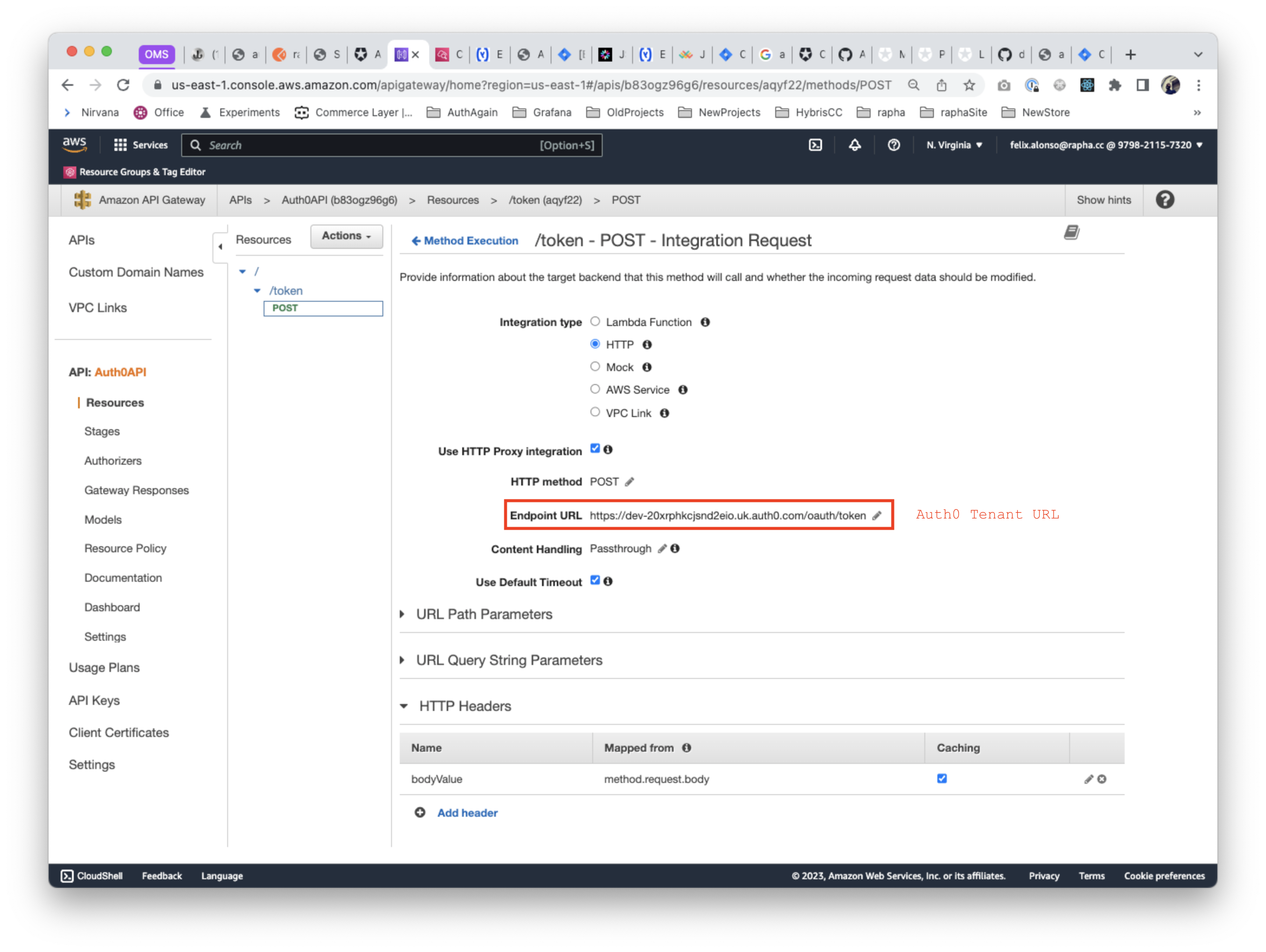

Configuring AWS API

To enable caching on a POST request, we first need to modify its Integration Request and configure the HTTP Headers, adding the body as a cacheable item mapped from method.request.body.

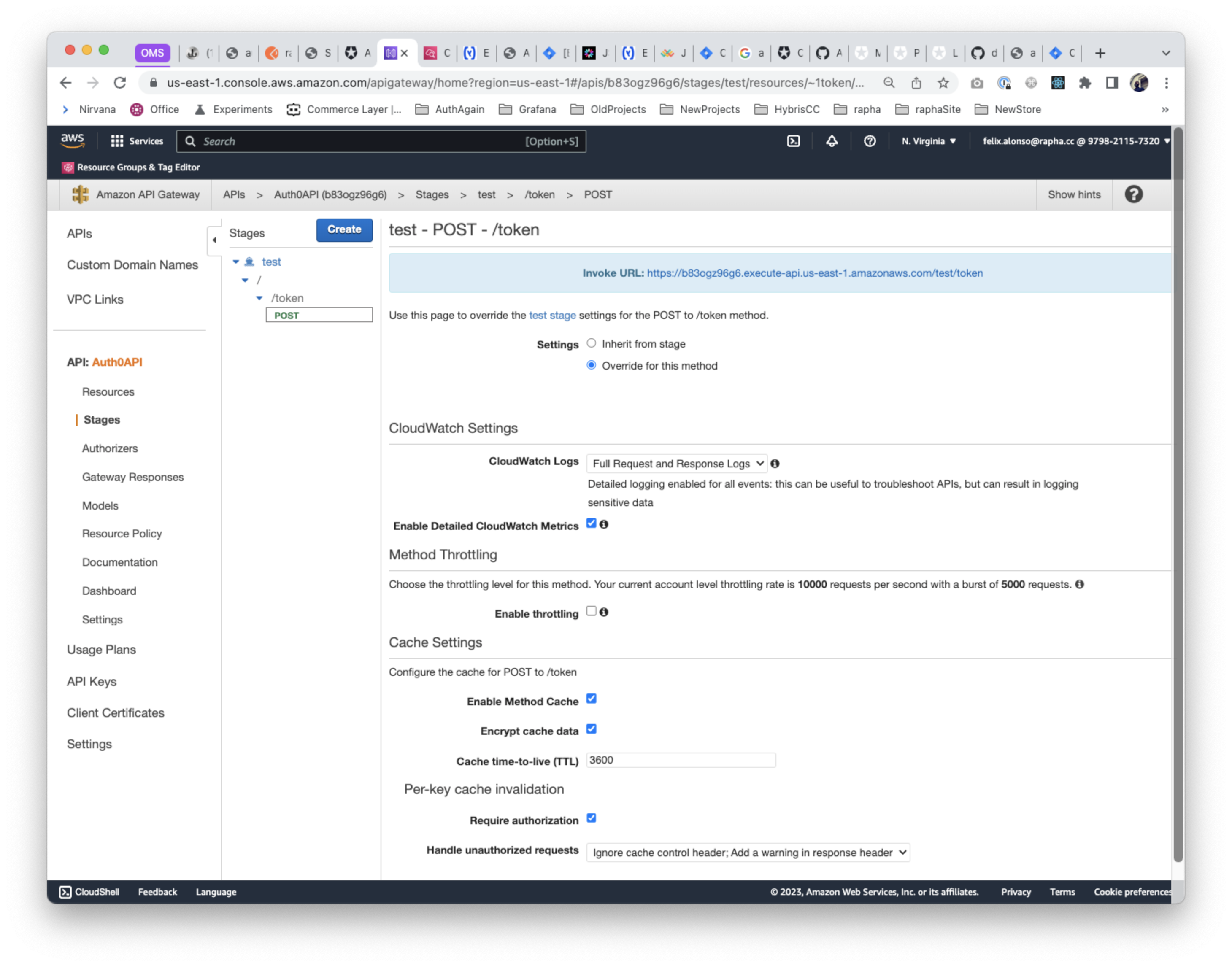

The second step is to override the POST method on the required Stage, configuring the desired TTL.
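The same stage override could, in principle, be scripted instead of clicked through the console. The sketch below only builds the patch operations for a method-level cache override; the `/oauth/token` resource path, the REST API ID, and the stage name are hypothetical, and the boto3 call is left commented so the block runs without AWS credentials. Treat it as a starting point to check against the API Gateway documentation rather than a tested deployment script.

```python
MAX_TTL_SECONDS = 3600  # maximum cache TTL noted in the caching details


def method_cache_patch_ops(resource_path, http_method, ttl_seconds):
    """Build patch operations that enable caching for a single method."""
    if not 0 <= ttl_seconds <= MAX_TTL_SECONDS:
        raise ValueError("ttl_seconds must be between 0 and 3600")
    # Slashes in the resource path are escaped as ~1 (JSON Pointer style).
    encoded = resource_path.replace("~", "~0").replace("/", "~1")
    prefix = f"/{encoded}/{http_method}/caching"
    return [
        {"op": "replace", "path": f"{prefix}/enabled", "value": "true"},
        {"op": "replace", "path": f"{prefix}/ttlInSeconds", "value": str(ttl_seconds)},
    ]


# Hypothetical resource path for the proxied token endpoint.
ops = method_cache_patch_ops("/oauth/token", "POST", 3600)

# Applying it would look roughly like this (IDs are placeholders):
# import boto3
# boto3.client("apigateway").update_stage(
#     restApiId="abc123",
#     stageName="prod",
#     patchOperations=ops,
# )
```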