Microservice - Combining RESTful HTTP with Asynchronous and Event-Driven Microservices

Overview

By its nature, a microservice platform is a distributed system running on multiple processes or services, across multiple servers or hosts. Building a successful platform on top of microservices requires integrating these disparate services and systems to produce a unified set of functionality.

Because integration needs vary, no single communication style can solve every problem. The aim of this document is to help us decide when and why to use each communication style.

Communication Types

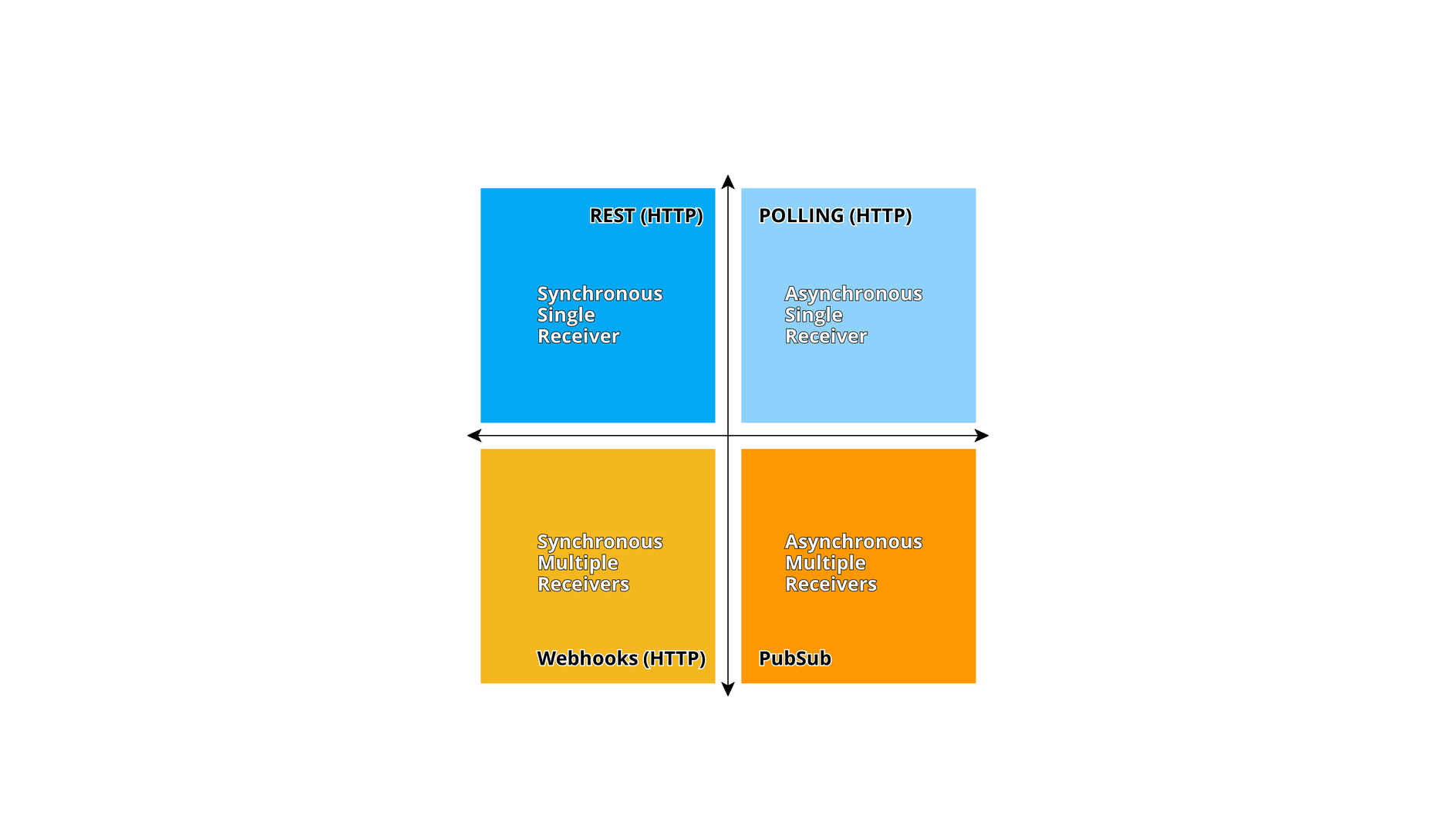

We can classify the available communication types along two axes. The first distinguishes synchronous from asynchronous communication. The second defines the number of receivers or clients of the message. The diagram above gives us a quick overview of the available communication types and when they should be used.

Service Independence

The communication type may not be the most important concern when building microservices. What is important is being able to integrate while maintaining the independence of microservices. Rather than worrying about which communication type to use, strive to minimize both the breadth and depth of communication between microservices altogether. The fewer communications between microservices, the better.

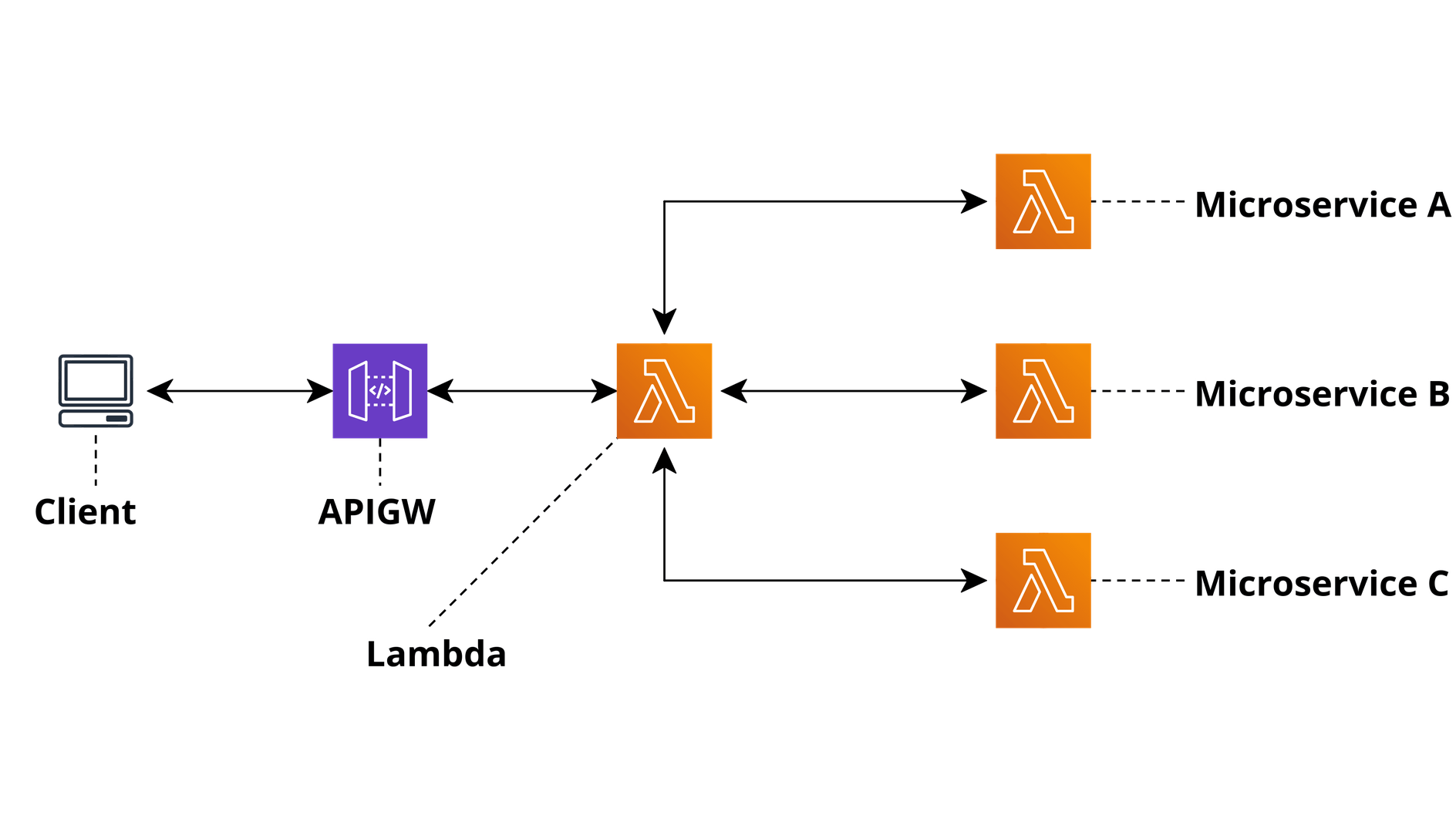

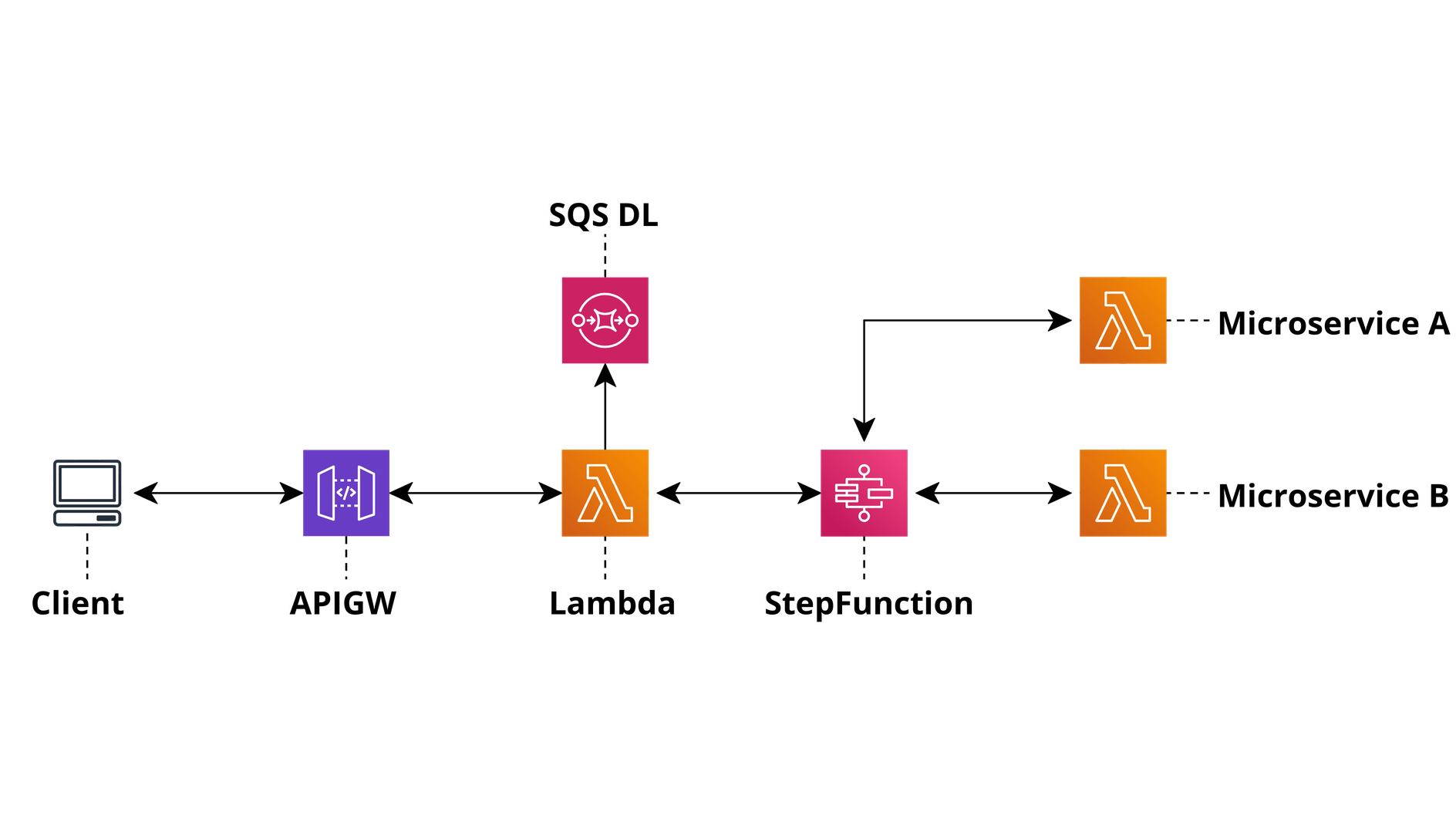

When you do need to communicate, the critical rule is to avoid chaining multiple synchronous calls between microservices. In other words, data should be propagated between microservices in parallel, preferably asynchronously, rather than in a synchronous series. Chaining synchronous API calls is an anti-pattern: every link in the chain is a point of failure, and a failure at any link fails the whole request. If we refer to the Serverless Microservice Patterns documentation, we can see that the API Gateway / Internal API, Aggregator and State Machine patterns are all exposed to this risk because their calls are chained.
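To make the difference between a synchronous series and parallel propagation concrete, here is a minimal sketch using Python's `asyncio`. The service names and the `call_service` helper are hypothetical stand-ins for real network calls, and the example still waits for every response, so it illustrates only the "parallel rather than in series" part of the rule.

```python
import asyncio
import time


async def call_service(name: str, delay: float) -> str:
    """Stand-in for a network call to a hypothetical downstream service."""
    await asyncio.sleep(delay)
    return f"{name}:ok"


async def chained(services: list[str], delay: float) -> list[str]:
    # Anti-pattern: each call waits for the previous one, so latency adds up
    # and a failure at any step stops everything after it.
    results = []
    for name in services:
        results.append(await call_service(name, delay))
    return results


async def fanned_out(services: list[str], delay: float) -> list[str]:
    # All calls are issued together; total latency is roughly that of the
    # slowest single call. gather() returns results in argument order.
    return list(await asyncio.gather(*(call_service(n, delay) for n in services)))


def timed(coro) -> tuple[list[str], float]:
    """Run a coroutine to completion and return its result and elapsed seconds."""
    start = time.perf_counter()
    result = asyncio.run(coro)
    return result, time.perf_counter() - start
```

Calling `timed(chained(names, 0.05))` and `timed(fanned_out(names, 0.05))` with the same list of names should return the same results, with the chained version taking noticeably longer.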

Prefer Asynchronous Communication

RESTful HTTP tends to promote encapsulation by hiding the data structure of a service behind a well-defined API, eliminating the need for a large shared data structure between teams. Unfortunately, relying completely on RESTful HTTP means applications are still fairly tightly coupled: the remote calls between services tend to tie the different systems into an ever-growing knot. It also means we have a single point of failure on the way into our Middleware.

Combining Asynchronous Communication with a RESTful Gateway

At the start of this document we noted that it is naive to think one communication style will satisfy all integration needs. RESTful HTTP APIs are a great example: they are an important part of many companies’ strategy. RESTful HTTP is one of the most widely used communication styles in the world, which makes it a key part of most organisations’ initiatives.

If we prefer asynchronous communication, but require RESTful HTTP, how do we combine them?

My suggestion is to leverage RESTful HTTP at the boundaries to our system using an API Gateway. RESTful HTTP-based APIs serve as the entry point and interaction point between logical boundaries within the system. These APIs should be well-defined, clearly documented, stable, and suitable for both internal and external use.
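One way to picture a RESTful boundary in front of asynchronous internals is a handler that validates the request, hands the work to a queue, and answers straight away with `202 Accepted`. The sketch below is an assumption for illustration only: the `POST /orders` endpoint, the `OrderRequested` message type, and the in-process `queue.Queue` (standing in for a real queue or event bus) are all hypothetical.

```python
import json
import queue
import uuid

# Stand-in for an internal queue or event bus; an assumption for illustration.
internal_queue: "queue.Queue[dict]" = queue.Queue()


def handle_post_order(body: str) -> tuple[int, dict]:
    """Hypothetical handler behind a RESTful endpoint such as POST /orders."""
    try:
        payload = json.loads(body)
    except json.JSONDecodeError:
        return 400, {"error": "body must be valid JSON"}
    if not isinstance(payload, dict) or "sku" not in payload:
        return 400, {"error": "missing field: sku"}

    order_id = str(uuid.uuid4())
    # Hand the work to the asynchronous internals instead of calling
    # downstream services one after another.
    internal_queue.put(
        {"type": "OrderRequested", "order_id": order_id, "payload": payload}
    )
    # 202 Accepted: the request was received and will be processed later.
    return 202, {"order_id": order_id, "status": "accepted"}
```

The caller sees an ordinary, stable HTTP contract, while everything behind the handler is free to be asynchronous.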

SQS

I want to touch on SQS, as we use it heavily when delivering information between our internal systems. When we integrate our internal systems, it is the producing service’s job to deliver that information and the consuming service’s job to consume it. Queues are a detail of the consumer.

In our case, a queue acts as a buffer between the caller (our middleware and the API exposed by one of our systems) and the service that processes the data. The queue smooths intermittent heavy loads that could otherwise cause the service to fail or the task to time out, which minimises the impact of peaks in demand on availability and responsiveness for both the API and the system. Because the queue decouples the tasks from the service, the system can handle messages at its own pace regardless of the volume of requests from concurrent tasks. Using a queue for message consumption is a good example of asynchronous design: it also lets a system scale out to process multiple messages concurrently, which improves throughput, scalability and availability, and balances the workload.
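The buffering behaviour described above can be sketched with Python's standard library. This is not SQS: `queue.Queue` and worker threads are an in-process stand-in, and `process` is a hypothetical placeholder for the real work. The point is that the producer enqueues a burst without waiting, while a fixed pool of workers drains the queue at its own pace, with each message handled by exactly one worker.

```python
import queue
import threading


def process(message: int) -> int:
    """Hypothetical placeholder for the real processing of one message."""
    return message * 2


def drain(buffer: queue.Queue, results: list, lock: threading.Lock) -> None:
    # Each worker pulls messages until it receives the None sentinel.
    while True:
        message = buffer.get()
        if message is None:
            break
        result = process(message)
        with lock:
            results.append(result)


def run_burst(messages: list[int], workers: int = 2) -> list[int]:
    buffer: queue.Queue = queue.Queue()
    results: list[int] = []
    lock = threading.Lock()
    threads = [
        threading.Thread(target=drain, args=(buffer, results, lock))
        for _ in range(workers)
    ]
    for thread in threads:
        thread.start()
    # The producer enqueues the whole burst without waiting for processing.
    for message in messages:
        buffer.put(message)
    # One sentinel per worker tells it to stop once the queue is drained.
    for _ in threads:
        buffer.put(None)
    for thread in threads:
        thread.join()
    return results
```

Results arrive in whatever order the workers finish, so callers should not rely on ordering. Adding workers raises throughput without changing the producer.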

EventBridge

In an event-driven system, events are delivered in near real time, so consumers can respond immediately to events as they occur. Producers are decoupled from consumers — a producer doesn’t know which consumers are listening. Consumers are also decoupled from each other, and every consumer sees all of the events. This scenario differs slightly from queueing, where consumers pull messages from a queue and a message is processed by a single consumer.
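The fan-out behaviour can be sketched with a tiny in-memory bus. This is not the EventBridge API: the `InMemoryEventBus` class and the `TransactionCompleted` event type are hypothetical, and delivery here is synchronous and in-process, whereas a real bus delivers asynchronously. It only illustrates that the producer does not know its consumers and that every subscriber receives every event.

```python
from typing import Callable

Handler = Callable[[dict], None]


class InMemoryEventBus:
    """Hypothetical stand-in for an event bus; not a real AWS client."""

    def __init__(self) -> None:
        self._handlers: dict[str, list[Handler]] = {}

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._handlers.setdefault(event_type, []).append(handler)

    def publish(self, event_type: str, detail: dict) -> int:
        # The producer only names the event type; it has no knowledge of
        # who is listening. Every subscriber receives its own copy.
        handlers = self._handlers.get(event_type, [])
        for handler in handlers:
            handler(dict(detail))
        return len(handlers)


# Two independent consumers of the same events.
nav_seen: list[dict] = []
exponea_seen: list[dict] = []

bus = InMemoryEventBus()
bus.subscribe("TransactionCompleted", nav_seen.append)
bus.subscribe("TransactionCompleted", exponea_seen.append)
delivered = bus.publish("TransactionCompleted", {"transaction_id": "t-1", "total": 42})
```

Contrast this with a queue, where the same message would reach only one of the two consumers.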

Event-driven systems are best used in a few different scenarios:

- You have multiple consumers that each must process the same set of events. An example of this is Nav and Exponea both needing to consume transaction data.

- You have a need for real-time processing with minimum time lag.

- You have complex event processing, such as pattern matching or aggregation.

- You are building an event sourced application where events, rather than data, are the source of truth for application state.
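As an illustration of the last scenario, the sketch below rebuilds application state by replaying events rather than reading a stored value. The `Deposited` and `Withdrawn` event types and the account-balance domain are hypothetical examples, not part of our systems.

```python
from dataclasses import dataclass
from functools import reduce
from typing import Iterable


@dataclass(frozen=True)
class Event:
    type: str
    amount: int


def apply(balance: int, event: Event) -> int:
    """Fold one event into the current state."""
    if event.type == "Deposited":
        return balance + event.amount
    if event.type == "Withdrawn":
        return balance - event.amount
    # Unknown event types leave the state unchanged.
    return balance


def replay(events: Iterable[Event]) -> int:
    # State is never stored directly; it is derived from the event log.
    return reduce(apply, events, 0)


log = [Event("Deposited", 100), Event("Withdrawn", 30), Event("Deposited", 5)]
```

Here `replay(log)` yields a balance of 75, and replaying any prefix of the log gives the state as it was at that point in time.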

Practical Guide

So far we have covered synchronous communication using REST and asynchronous communication using an event bus and queueing. We also talked about how, in practice, you need more than one of these communication types to build a full-featured service.

The example below begins with a single microservice and later splits it into multiple services as complexity increases.

A Simple RESTful Service

The starting point for thinking about communication is a single service. In this case, the best path forward is to create a simple RESTful API that matches our external API standards and use that as the API for our service.

Multiple Services

As our service grows, we may choose to split our RESTful service into multiple microservices. We now have a distributed system! This means we have to prepare for an additional set of challenges, and we should begin favouring asynchronous communication between services. Going fully asynchronous limits our ability to release third-party APIs, so the correct approach here is to keep the RESTful API we created for our single service and use it as a frontend at the boundary of our system. As far as developers outside of our squad are concerned, the only interfaces to our system are our RESTful frontend and our event stream, exactly as though it were a single service.

Within our system boundary, we may choose to organise our microservices in ways that make sense to our team. The key thing to keep in mind is to maintain a stable frontend API into our system — strive to avoid leaking internal details as much as possible.

The event stream we have in place still gives other squads a way to asynchronously receive updates to any application state.

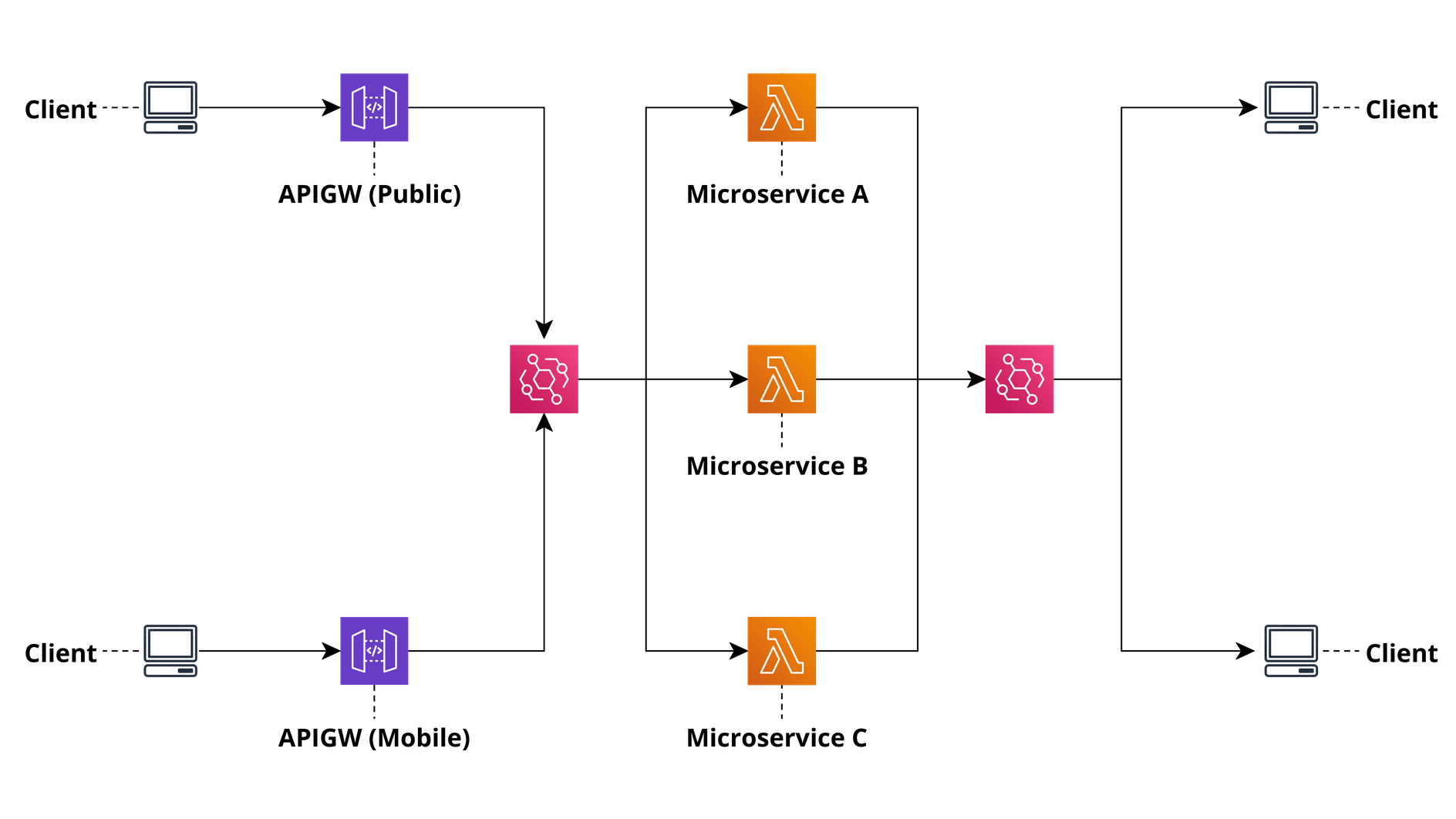

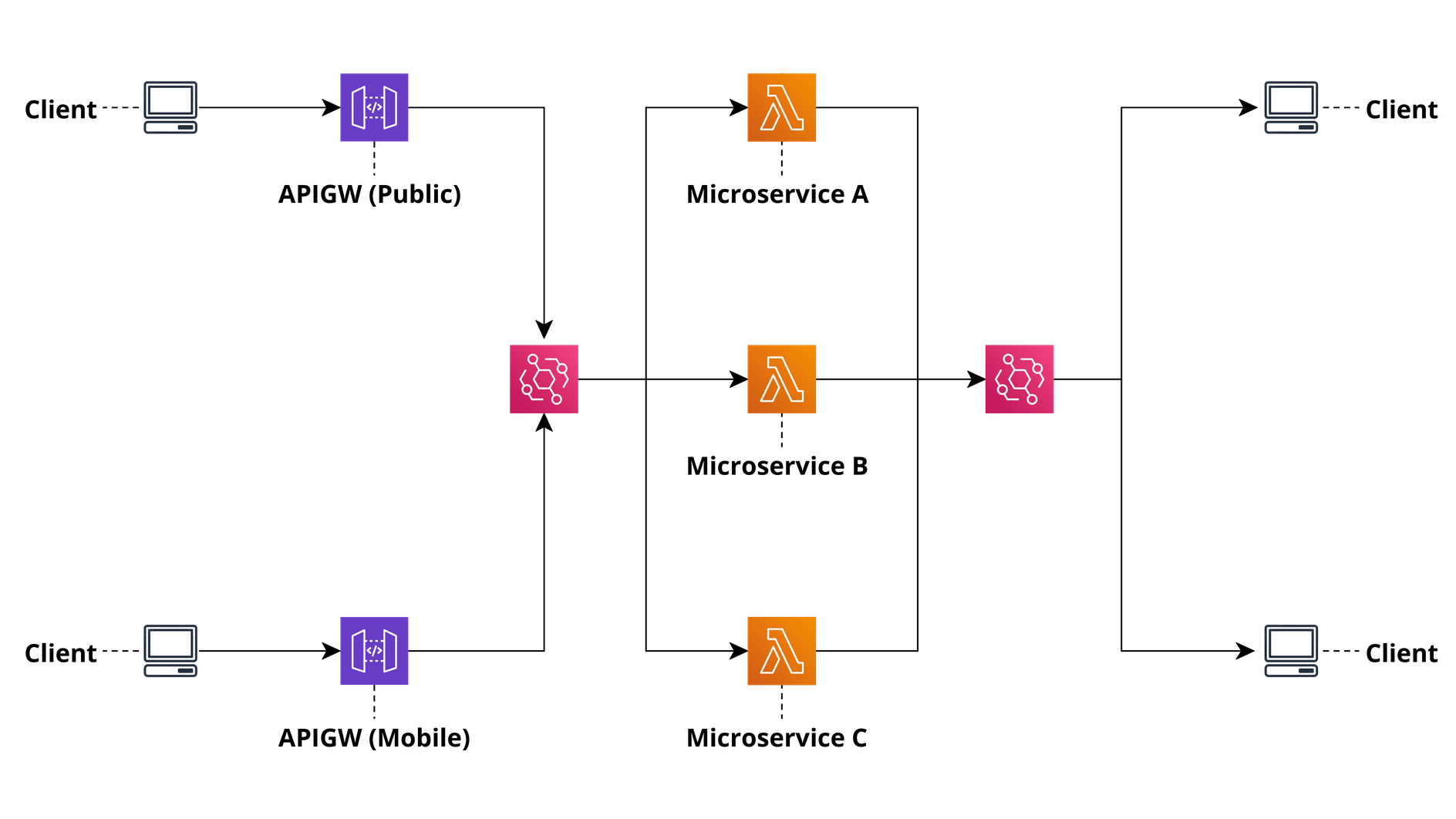

Multiple Consumers

Although we have a nice RESTful API, it is unlikely the same API will be useful to the browser, other backend services, third-party customers, and a mobile application all at the same time. If we are faced with multiple users with different integration needs, we can add additional API frontends that maintain the boundary between our system and external consumers.

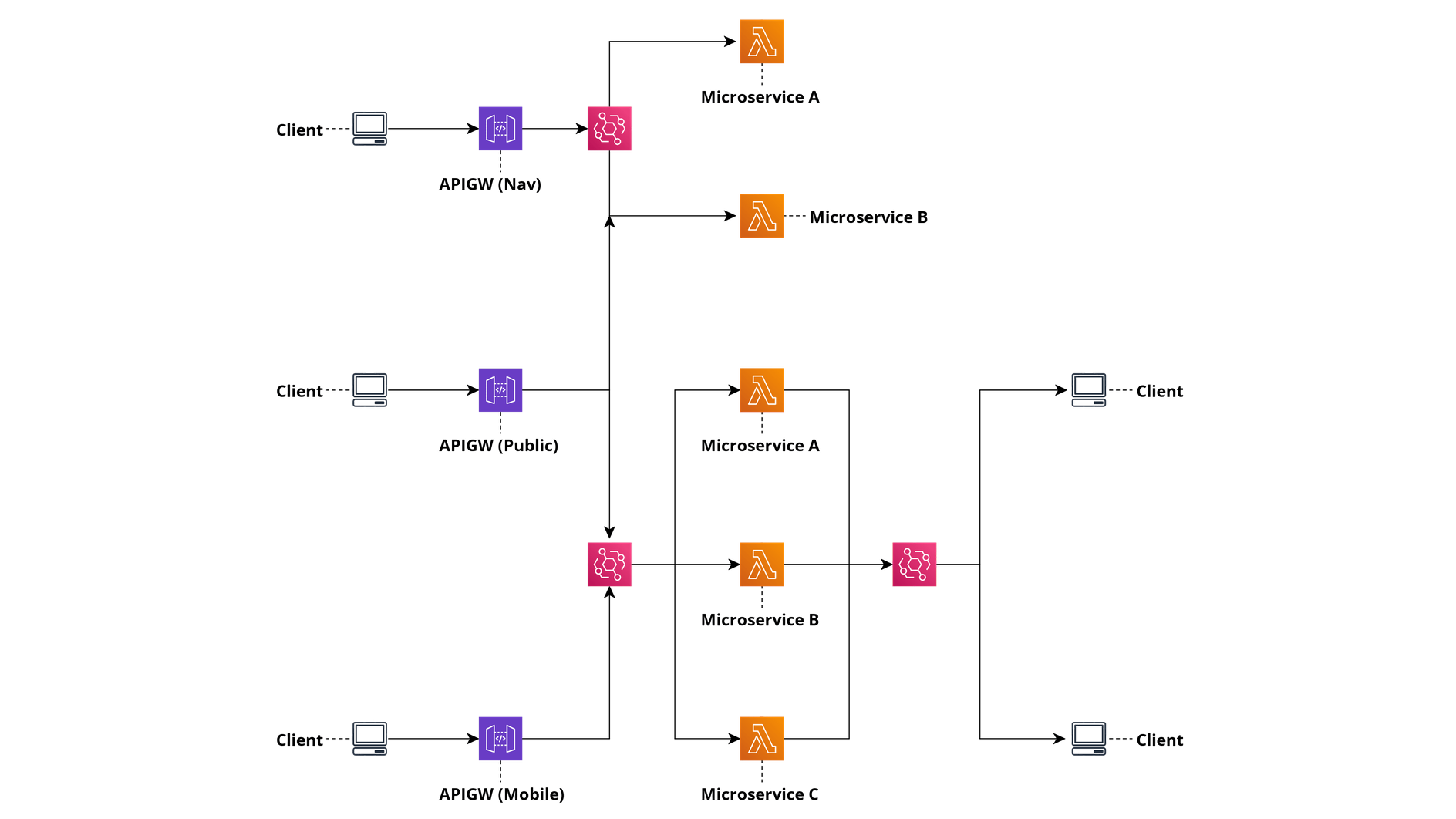
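A minimal sketch of two API frontends over the same internal data might look like the following. The order record, field names, and the `mobile_frontend` and `partner_frontend` functions are all hypothetical; the point is that each frontend shapes a response for its own consumer and that internal details stay inside the boundary.

```python
# Hypothetical internal representation owned by the system; never exposed directly.
_ORDERS = {
    "o-1": {
        "id": "o-1",
        "status_code": 3,
        "lines": [{"sku": "abc", "qty": 2, "unit_pence": 250}],
        "warehouse_bin": "A-17",  # internal detail that must not leak
    }
}
_STATUS = {1: "pending", 3: "shipped"}


def mobile_frontend(order_id: str) -> dict:
    """Compact payload for a mobile client on a slow connection."""
    order = _ORDERS[order_id]
    return {"id": order["id"], "status": _STATUS[order["status_code"]]}


def partner_frontend(order_id: str) -> dict:
    """Richer, stable contract for third-party integrators."""
    order = _ORDERS[order_id]
    total = sum(line["qty"] * line["unit_pence"] for line in order["lines"])
    return {
        "order_id": order["id"],
        "status": _STATUS[order["status_code"]],
        "total_pence": total,
        "items": [{"sku": l["sku"], "quantity": l["qty"]} for l in order["lines"]],
    }
```

Because both frontends translate from the internal shape, the internal record can change without breaking either consumer as long as the translations are updated.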

Building a Composable Platform

I’ve framed our short example in the context of a team building up a set of services to address their specific problems by using a RESTful API frontend coupled with an asynchronous event stream as the interface to their system. This interface is independent of the number and configuration of individual microservices within the boundary of the system. As far as outside consumers are concerned, the system is a RESTful API and a series of events. Internal to the team, the choice to use a queue or an asynchronous message bus should be treated mostly as an implementation detail. The guidance here is to favour asynchronous communication; where that is not possible, to favour RESTful APIs so that we are best positioned to meet the needs of third-party customers; and to treat queues as an implementation detail that helps address availability and scale, not as an API for others.

The following image shows how two systems can be composed leveraging their API frontends and events to integrate between them.

Summary

With each team addressing the APIs to their system in this way, we can start to build up a composable platform of RESTful APIs and Event Streams that follows a good set of architecture principles:

- No single point of failure. Queues and/or topics are localised to a bounded context instead of using a global messaging bus. This also allows for a scalable architecture using z-scaling without having to replicate our entire system.

- Isolate faults. By separating private details into your local system boundary, any faults are localised to the bounded context, limiting the blast radius for failure.

- Asynchronous design. This design supports an asynchronous and event-driven design.

- Stateless. API Frontends (also called Backends for Frontends or BFFs) are naturally stateless and scalable. Backends for Frontends can be combined with scalable, stateless workers in the Competing Consumers or Queue-Based Load Leveling patterns.

- Scale out, not up. BFFs and queue-based load levelling are naturally scalable. Splitting RPC traffic out of a global NATS allows our organisation to scale out more effectively than relying on scaling up usage of NATS indefinitely.

- Think external first. BFFs at each edge of a Bounded Context support third-party APIs. APIs you expose to others are designed intentionally and support open standards.